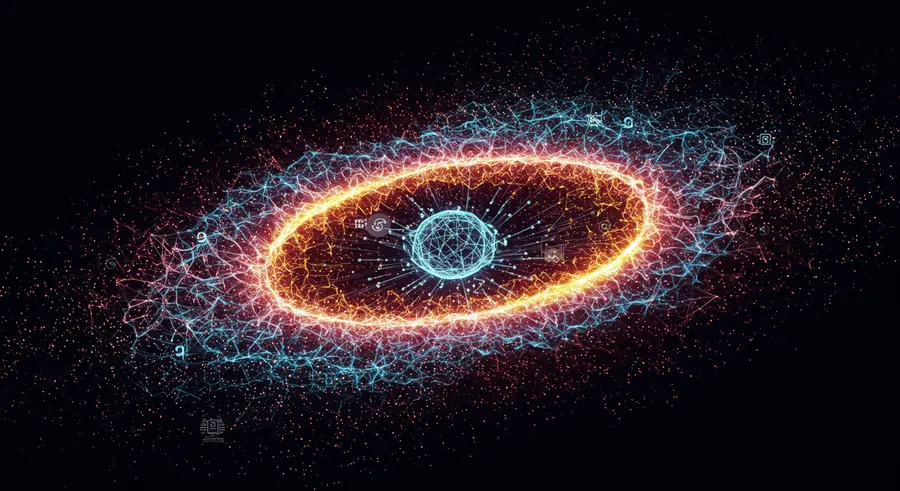

Graph Embeddings: Bridging Graphs and Machine Learning

In data science, combining the relational information held in graph databases with the predictive power of machine learning models is an active area of work. Graph embeddings address this by representing nodes, edges, or entire subgraphs as low-dimensional vectors in a continuous space. These vectors are designed to capture the structural and semantic properties of the graph, which makes them usable by standard machine learning algorithms that expect flat, fixed-length feature vectors.

The "Why" Behind Embeddings

Why embed graphs at all? Graphs are complex, non-Euclidean data structures: they have no fixed node ordering, no fixed neighborhood size, and no grid-like coordinate system. Traditional machine learning models, such as support vector machines or linear regression, expect fixed-length feature vectors and cannot process such relational data directly. Graph embeddings resolve this impedance mismatch by converting graph elements into vectors these algorithms can consume. The goal is to preserve proximity: if two nodes are closely related in the graph (for example, they are connected, share common neighbors, or play similar structural roles), their vectors should also be close to each other in the embedding space.
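One way to make this goal precise, used by the Laplacian eigenmaps method, is to penalize the distance between the embeddings of connected nodes. As an illustrative formulation (notation introduced here for the example): take an undirected graph with n nodes, symmetric adjacency matrix A, diagonal degree matrix D with D_uu = sum over v of A_uv, and an embedding matrix Z with d columns whose row u is the vector z_u of node u. Then

```latex
\begin{aligned}
\sum_{u=1}^{n}\sum_{v=1}^{n} A_{uv}\,\lVert z_u - z_v \rVert_2^2
  &= 2\,\operatorname{tr}\!\left(Z^\top L Z\right), \qquad L = D - A, \\
Z^\star &= \arg\min_{Z \in \mathbb{R}^{n \times d}} \operatorname{tr}\!\left(Z^\top L Z\right)
  \quad \text{subject to} \quad Z^\top D Z = I_d .
\end{aligned}
```

Each edge contributes a penalty proportional to how far apart its endpoints are embedded, so minimizing the sum pulls connected nodes together. The constraint rules out the trivial solution Z = 0 and forces the d embedding dimensions to be linearly independent. The minimizer is given by eigenvectors of the generalized problem L z = λ D z with the smallest eigenvalues; the eigenvector for eigenvalue 0, which is constant on a connected graph, is usually discarded.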

How Graph Embeddings Work

Graph embedding algorithms learn a mapping from graph elements to a dense vector space. The main families of techniques differ in how they learn that mapping:

- Matrix Factorization Methods: These approaches, often inspired by techniques used in recommender systems, factorize a graph's adjacency matrix or a related matrix (e.g., the graph Laplacian) and use the factors of a low-rank approximation as embeddings.

- Random Walk Based Methods: Algorithms like DeepWalk and Node2Vec generate sequences of nodes by simulating random walks on the graph. These sequences are then fed into word embedding models (like Word2Vec) to learn node representations, treating node sequences like sentences and nodes like words.

- Graph Neural Networks (GNNs): Often the most flexible category, GNNs operate directly on the graph structure. Each layer updates a node's representation by aggregating information from its neighbors, so stacking k layers lets a node's representation draw on its k-hop neighborhood; unlike purely structural methods, GNNs can also incorporate node and edge features. Popular GNN architectures include Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs), and Message Passing Neural Networks (MPNNs). GNNs can capture complex, multi-hop relationships and are at the forefront of graph-based machine learning research.

- Hybrid Methods: Some approaches combine elements from the above, for example using random walks to generate initial embeddings that are then refined by a GNN.
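The random-walk and GNN families above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a reference implementation of DeepWalk or of any published GCN: the toy graph, the function names `random_walks` and `mean_aggregate_layer`, and the hand-picked weight matrix are all hypothetical. The aggregation step uses a simple mean over a node and its neighbors rather than the symmetric degree normalization of the original GCN, and its weights are fixed instead of learned. Matrix factorization is omitted because it normally relies on a linear-algebra library.

```python
import random
from typing import Dict, List

# Hypothetical toy undirected graph stored as an adjacency list.
Graph = Dict[str, List[str]]


def random_walks(graph: Graph, walks_per_node: int, walk_length: int,
                 seed: int = 0) -> List[List[str]]:
    """Uniform random walks in the style of DeepWalk.

    Each walk is a list of node IDs that plays the role of a sentence
    for a word-embedding model.
    """
    rng = random.Random(seed)  # seeded so the walks are reproducible
    walks: List[List[str]] = []
    for _ in range(walks_per_node):
        start_nodes = list(graph)
        rng.shuffle(start_nodes)  # vary the order of start nodes each pass
        for start in start_nodes:
            walk = [start]
            while len(walk) < walk_length:
                neighbors = graph[walk[-1]]
                if not neighbors:
                    break  # isolated node or dead end: stop this walk early
                walk.append(rng.choice(neighbors))
            walks.append(walk)
    return walks


def mean_aggregate_layer(graph: Graph, features: Dict[str, List[float]],
                         weight: List[List[float]]) -> Dict[str, List[float]]:
    """One message-passing step: average each node's own features with its
    neighbors' features, apply a linear map, then a ReLU."""
    out: Dict[str, List[float]] = {}
    in_dim, out_dim = len(weight), len(weight[0])  # weight is in_dim x out_dim
    for node, neighbors in graph.items():
        group = [node] + neighbors  # include a self-loop, as GCNs do
        mean = [sum(features[u][i] for u in group) / len(group)
                for i in range(in_dim)]
        linear = [sum(mean[i] * weight[i][j] for i in range(in_dim))
                  for j in range(out_dim)]
        out[node] = [max(0.0, x) for x in linear]  # ReLU nonlinearity
    return out


# Usage on a four-node example graph.
graph: Graph = {"a": ["b", "c"], "b": ["a", "c"],
                "c": ["a", "b", "d"], "d": ["c"]}
walks = random_walks(graph, walks_per_node=2, walk_length=5)
print(walks[0])

features = {"a": [1.0, 0.0], "b": [0.0, 1.0],
            "c": [1.0, 1.0], "d": [0.0, 0.0]}
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for a learned weight matrix
hidden = mean_aggregate_layer(graph, features, identity)
print(hidden["d"])  # [0.5, 0.5]: average of d and its only neighbor c
```

In a DeepWalk-style pipeline, the list of walks would then be passed as the "sentences" to a Word2Vec implementation (for example gensim's `Word2Vec` class) to obtain one vector per node. Applying `mean_aggregate_layer` k times lets each node's output depend on its k-hop neighborhood, which is how GNNs capture multi-hop structure.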

Applications of Graph Embeddings

Once graph elements are represented as vectors, they can support a wide range of machine learning tasks:

- Node Classification: Predicting the type or category of a node (e.g., identifying fraudulent accounts in a transaction graph).

- Link Prediction: Forecasting the existence of future or missing connections between nodes (e.g., recommending friends in a social network or predicting new drug interactions).

- Clustering: Grouping similar nodes together based on their structural and feature similarities.

- Anomaly Detection: Identifying unusual patterns or outliers in network behavior.

- Recommendation Systems: Enhancing recommendations by understanding user-item interactions and their underlying relationships.

- Knowledge Graph Completion: Inferring missing facts or relationships in large knowledge bases.
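Link prediction shows how embeddings are used once they exist. A minimal sketch, assuming embeddings have already been produced by one of the methods above: score every unconnected pair of nodes by the cosine similarity of their vectors and return the highest-scoring pairs as predicted links. The names `cosine` and `rank_candidate_links` and the two-dimensional embeddings are hypothetical. Practical systems often learn the scoring function instead (for example a dot product followed by a sigmoid, or a classifier over pair features) and avoid scoring all O(n^2) pairs on large graphs.

```python
import math
from typing import Dict, Iterable, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_candidate_links(embeddings: Dict[str, List[float]],
                         existing_edges: Iterable[Tuple[str, str]],
                         top_k: int) -> List[Tuple[str, str, float]]:
    """Score each unconnected node pair by embedding similarity, best first."""
    existing = {frozenset(edge) for edge in existing_edges}  # undirected edges
    nodes = sorted(embeddings)
    scored: List[Tuple[str, str, float]] = []
    for i, u in enumerate(nodes):
        for v in nodes[i + 1:]:
            if frozenset((u, v)) in existing:
                continue  # already linked, nothing to predict
            scored.append((u, v, cosine(embeddings[u], embeddings[v])))
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings for a tiny social network.
embeddings = {"alice": [0.9, 0.1], "bob": [0.8, 0.2],
              "carol": [0.1, 0.9], "dave": [0.3, 0.7]}
predictions = rank_candidate_links(embeddings, [("alice", "carol")], top_k=2)
for u, v, score in predictions:
    print(f"{u} -- {v}: {score:.3f}")  # alice -- bob first, then carol -- dave
```

Node classification follows a similar pattern: the embedding vectors become the input features of an ordinary classifier such as logistic regression.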

Challenges and Future Directions

While graph embeddings offer great potential, challenges remain. Scalability to very large graphs, handling dynamic graphs (whose structure changes over time), and interpreting the learned embeddings are all open research areas. Dynamic graphs are hard partly because many methods are transductive: they learn one vector per node seen during training and must be retrained or extended to embed new nodes. The field is evolving quickly, with new architectures and techniques appearing regularly.

Graph embeddings are a foundational concept for anyone applying machine learning to interconnected data, bridging graph theory and the practical demands of AI. For further reading, the Wikipedia article on graph neural networks and the papers returned by searching arXiv for "graph embeddings" are good starting points.